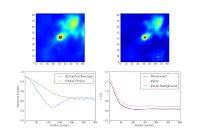

Aperture photometry on isolated and not-so-isolated sources in the Herschel-based BGPS simulation, using the L=111 field as the "noise". Depending on the aperture, our flux recovery can be very low. The images should give an idea of the S/N. Here, background subtraction means subtracting the median of the whole image; it works frighteningly well in most cases.
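The sketch below shows how this kind of measurement behaves. It assumes a plain circular aperture in pixel units and the global-median background described above. The Gaussian source, its width, the flat offset and the white noise are hypothetical stand-ins, not the actual simulation or the L=111 field. `aperture_flux` and `gaussian_source` are illustrative helpers, not functions from any pipeline.

```python
import numpy as np


def aperture_flux(image, x0, y0, radius, subtract_median=True):
    """Sum pixel values inside a circular aperture centred on (x0, y0).

    If subtract_median is True, the median of the whole image is subtracted
    from every pixel first, as a crude global background estimate.
    """
    data = np.asarray(image, dtype=float)
    if subtract_median:
        data = data - np.median(data)
    yy, xx = np.indices(data.shape)
    inside = (xx - x0) ** 2 + (yy - y0) ** 2 <= radius ** 2
    return data[inside].sum()


def gaussian_source(shape, x0, y0, sigma, total_flux):
    """Hypothetical circular Gaussian source normalised to total_flux."""
    yy, xx = np.indices(shape)
    g = np.exp(-((xx - x0) ** 2 + (yy - y0) ** 2) / (2 * sigma ** 2))
    return total_flux * g / g.sum()


rng = np.random.default_rng(0)
shape = (101, 101)
true_flux = 100.0
# Hypothetical stand-in for the "noise" field: a flat offset plus white noise.
background = 5.0 + rng.normal(0.0, 0.1, size=shape)
image = background + gaussian_source(shape, 50, 50, sigma=3.0, total_flux=true_flux)

# Flux recovery (measured / true) as a function of aperture radius.
for radius in (2, 4, 6, 9):
    recovered = aperture_flux(image, 50, 50, radius)
    print(f"r = {radius:2d} px: flux recovery = {recovered / true_flux:.2f}")
```

Apertures much smaller than the source width recover only a small fraction of the flux, which mirrors the aperture dependence seen above. Because the source covers a tiny fraction of the pixels, the global median sits very close to the true offset in this toy case. A real noise field is probably not white, so the median is likely to be a less reliable estimate there.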